What is A/B testing?

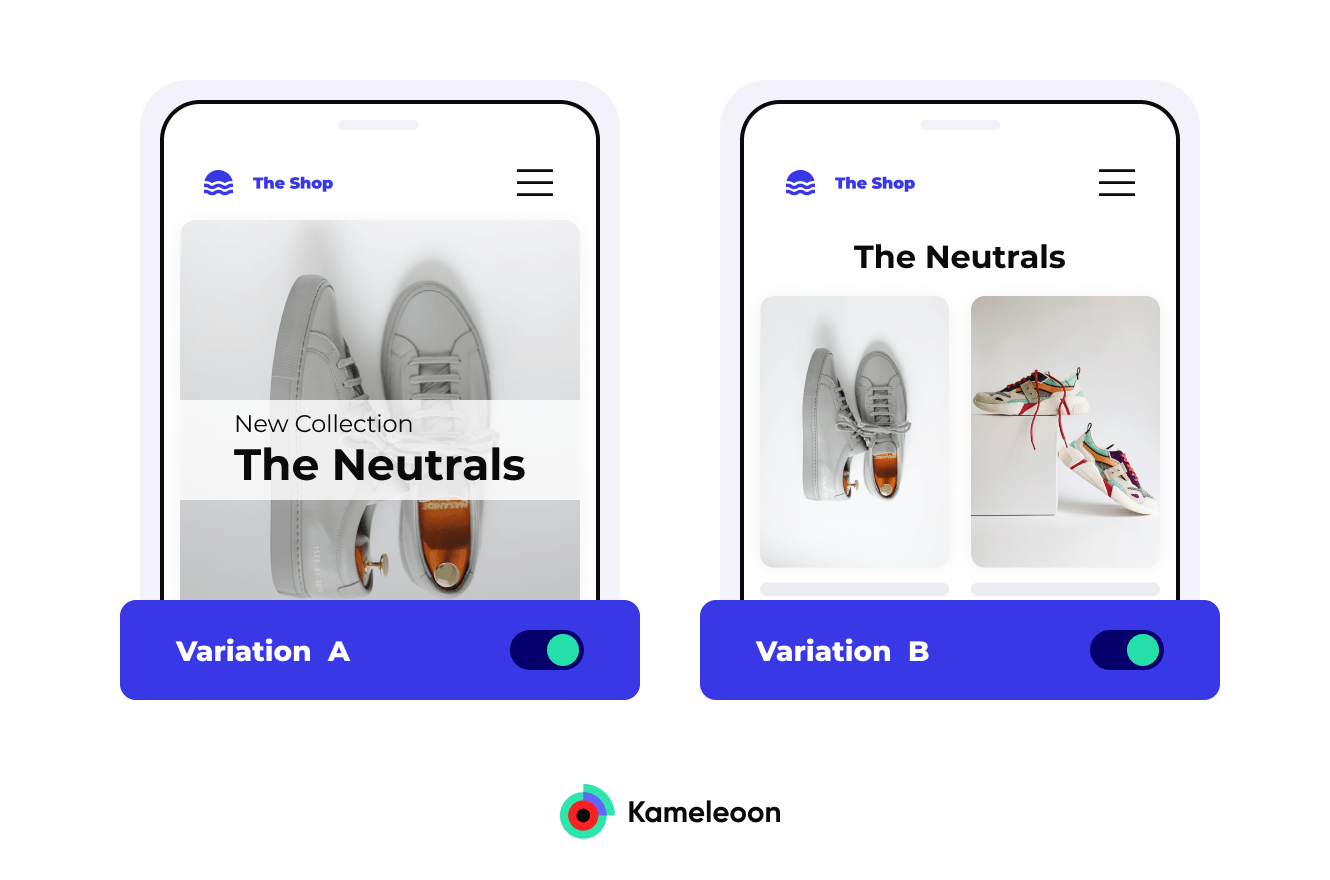

A/B testing is an online experimentation process that helps you determine which of two or more assets, like a web page or a page element, performs best according to whatever measurable criteria you want to set.

Put simply, A/B tests allow you to see which variation works better for your audience based on statistical analysis.

To conduct an A/B test, you:

- Randomly divide your traffic into two or more equal groups.

- Show those groups different variations of your assets for a set period.

- Compare the relative performance of each version in terms of your metrics, such as conversions or sales.

- Analyze the results to determine if the changes are worth implementing permanently.
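In practice, the random split in the first step is often made deterministic, so that a returning visitor keeps seeing the same variation. Here is a minimal sketch of that idea. It assumes a string visitor ID and an experiment key, both hypothetical names rather than any particular platform's API. The pair is hashed into 10,000 buckets, which are divided evenly between variations:

```python
import hashlib
from typing import Sequence


def assign_variation(visitor_id: str, experiment_key: str, variations: Sequence[str]) -> str:
    """Map a visitor to one of `variations`, with an equal share of traffic for each."""
    # Hashing the experiment key together with the visitor ID keeps assignments
    # stable within one experiment but independent across experiments.
    digest = hashlib.sha256(f"{experiment_key}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 10_000  # bucket in 0..9999
    # Integer arithmetic splits the 10,000 buckets into equal slices.
    return variations[bucket * len(variations) // 10_000]


# Simulate 10,000 visitors entering a hypothetical "homepage-cta" experiment.
assignments = [assign_variation(f"visitor-{i}", "homepage-cta", ["A", "B"]) for i in range(10_000)]
print(assignments.count("A"), assignments.count("B"))
```

Both counts should land close to 5,000, and calling the function again for the same visitor always returns the same variation. Hashing instead of drawing a random number on every visit means assignments do not have to be stored to stay consistent.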

What are the benefits of A/B testing?

There are many benefits to implementing A/B testing at your organization. We’ll break down five reasons below.

Increased conversions

By testing different variations, you can continuously optimize your website to improve the visitor experience and your overall conversion rate.

Better audience engagement

A/B testing helps you identify the experiences that engage your audience with your brand and keep them coming back over the long term.

Improved visitor insights

Analyze how the different elements of your pages impact your visitors’ behavior and learn more about their needs and expectations.

Decisions based on quantified results

A/B testing your hypotheses helps you reduce risk, so you can make decisions based on reliable data and statistics rather than subjective assessment.

Optimized productivity and budgets

Use what you learn from your A/B tests to channel your effort and budget into what works best for every audience segment.

With A/B testing, you can answer these questions with confidence:

- Which elements drive sales and conversions or impact user behavior?

- Which steps of your conversion funnels are underperforming?

- Should you implement this new feature or not?

- Should you have long or short forms?

- Which title for your article generates more shares?

How do different teams use A/B testing?

A/B testing is valuable in part because of how flexible it is. As a result, many different teams can generate meaningful insights from well-constructed A/B tests.

Marketing teams

Marketing teams often use A/B tests to improve the performance of their campaigns across a variety of channels.

Marketing teams can test which of several different calls to action performs best on an underperforming landing page. Or they could test different user experiences to determine which one boosts KPIs the most.

Product teams

A/B tests are an excellent tool for improving user retention and engagement in a product.

Product teams can test different onboarding processes to see which one helps customers reach more milestones. You can also test different versions of features when they’re introduced. This process is called feature experimentation, and it’s growing in popularity among product-led organizations.

Growth & experimentation teams

Growth teams can easily use A/B tests to evaluate the customer journey they’ve constructed.

Growth and experimentation teams can test which landing page variations lead to the highest number of new registrations. Or they can evaluate which product page designs lead to the fewest abandoned carts.

Types of A/B testing

Let’s break down some of the most common types of A/B testing used by teams today.

Split testing

Split testing is a specific type of A/B testing where you send test audiences to different, or split, URLs. The split is hidden from those audiences.

Split testing is sometimes used as a synonym for A/B testing, but in classic A/B testing your audiences see different versions of the same URL.

Multivariate testing (MVT)

Multivariate testing (or MVT) is an A/B testing methodology that lets you test multiple variations of an element (or more than one element) at the same time during an experiment.

For example, you could test combinations of headers, descriptions, and associated product videos. In this case, multiple page or app variants are generated to try every combination of these changes and determine the best one.

The big downside of multivariate testing is that you need an enormous amount of traffic for results to be statistically meaningful.

Therefore, before starting a multivariate testing project, you should check that your test audience is large enough to provide representative results.
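The traffic problem comes from multiplication. Each element you vary multiplies the number of variants, and every variant needs its own share of visitors. The sketch below uses hypothetical element options and an assumed 100,000 monthly visitors:

```python
from itertools import product

# Hypothetical options for each element under test
headers = ["Header 1", "Header 2", "Header 3"]
descriptions = ["Short description", "Long description"]
videos = ["No video", "Demo video"]

# A full-factorial multivariate test builds one variant per combination.
variants = list(product(headers, descriptions, videos))

monthly_visitors = 100_000  # assumed traffic, for illustration only
visitors_per_variant = monthly_visitors // len(variants)

print(len(variants))          # 12 combinations (3 x 2 x 2)
print(visitors_per_variant)   # 8333 visitors per variant per month
```

Twelve variants leave about 8,333 visitors each per month, compared with 50,000 each in a simple two-variant A/B test. Adding a fourth element with two options would double the count to 24 variants and halve the traffic each one receives.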

A/A testing

A/A tests enable you to test two identical versions of an element. The traffic to your website is divided into two, with each group exposed to the same variation.

A/A testing can help determine whether the conversion rates in each group are similar and confirm that your solution is working correctly. It is also a good way to identify bugs and outliers that affect results, thereby raising the level of trust in your experiments.
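Because both groups in an A/A test see the same thing, any "significant" difference between them is a false positive. At a 95 percent confidence level, you should expect roughly one A/A comparison in 20 to cross that threshold by chance. The sketch below simulates many A/A tests with an assumed 5 percent conversion rate. It uses a pooled two-proportion z-test, which is one common choice of test and not necessarily the one your platform uses:

```python
import math
import random


def p_value_two_proportions(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no conversions at all (or all converted): no evidence of a difference
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


def aa_false_positive_rate(runs: int = 500, visitors: int = 2_000,
                           true_rate: float = 0.05, seed: int = 7) -> float:
    """Share of simulated A/A tests that look 'significant' at the 95% level."""
    rng = random.Random(seed)
    flagged = 0
    for _ in range(runs):
        # Both groups share the same true conversion rate, as in an A/A test.
        conv_a = sum(rng.random() < true_rate for _ in range(visitors))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors))
        if p_value_two_proportions(conv_a, visitors, conv_b, visitors) < 0.05:
            flagged += 1
    return flagged / runs


print(aa_false_positive_rate())
```

The printed share should sit near 0.05. If real A/A tests on your site are flagged much more often than that, suspect the traffic split or the tracking before trusting any A/B result.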

Multi-armed bandit testing (Dynamic traffic allocation)

Multi-armed bandit testing, often called dynamic traffic allocation, is a method in which an algorithm automatically and gradually redirects your audience toward the winning variation.

The winner is determined by whatever metrics you defined at the outset of your test.
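Thompson sampling is one common algorithm behind this kind of dynamic allocation. For each visitor, it samples a plausible conversion rate for every variation and serves the variation with the highest draw. The sketch below simulates it with assumed true conversion rates of 5 and 10 percent. It illustrates the technique and does not describe any particular platform's allocation algorithm:

```python
import random
from typing import List, Sequence


def thompson_sampling(true_rates: Sequence[float], visitors: int = 20_000, seed: int = 42) -> List[int]:
    """Simulate Beta-Bernoulli Thompson sampling; return how many visitors each variation received."""
    rng = random.Random(seed)
    conversions = [0] * len(true_rates)
    misses = [0] * len(true_rates)
    allocation = [0] * len(true_rates)
    for _ in range(visitors):
        # Sample a plausible conversion rate for each variation from its Beta posterior
        # (a flat Beta(1, 1) prior updated with the results observed so far).
        draws = [rng.betavariate(1 + c, 1 + m) for c, m in zip(conversions, misses)]
        chosen = draws.index(max(draws))  # send this visitor to the best draw
        allocation[chosen] += 1
        # In a simulation we know the true rate; in production this is the observed outcome.
        if rng.random() < true_rates[chosen]:
            conversions[chosen] += 1
        else:
            misses[chosen] += 1
    return allocation


print(thompson_sampling([0.05, 0.10]))
```

Most simulated visitors end up on the variation with the higher conversion rate, while the weaker one keeps receiving a small amount of exploratory traffic. Compared with a fixed 50/50 split, fewer visitors see a losing variation, at the cost of less precise estimates for that variation.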

Feature testing

Feature testing—or feature experimentation—involves building multiple feature versions in your codebase.

While you may need developers to write features for you, some no-code or low-code feature management tools allow non-technical users, like product managers, to build feature experiments independently.

When you set up a feature test, you create a feature flag in your code, which routes users to different code versions. Feature testing is a middle ground in difficulty between implementing a client-side test and a server-side test.

Because a feature flag can wrap almost any part of your codebase, feature experimentation lets you test practically anything you could test with server-side testing.
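A feature flag can be as small as a stable per-user check that chooses between two code paths. In the sketch below, the flag structure, the `free-shipping-threshold` key, and the shipping rule are all hypothetical examples. They are not the API of any feature management tool:

```python
import hashlib
from dataclasses import dataclass
from typing import Sequence


@dataclass
class FeatureFlag:
    key: str
    rollout_percent: int  # share of users (0-100) routed to the new code path


def is_enabled(flag: FeatureFlag, user_id: str) -> bool:
    """Stable per-user decision, so a user stays on one side of the flag across requests."""
    digest = hashlib.sha256(f"{flag.key}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return bucket < flag.rollout_percent


def shipping_fee(cart: Sequence[float], user_id: str, flag: FeatureFlag) -> float:
    if is_enabled(flag, user_id):
        # Variation: hypothetical free-shipping threshold
        return 0.0 if sum(cart) >= 50 else 4.99
    # Control: existing flat fee
    return 4.99


flag = FeatureFlag(key="free-shipping-threshold", rollout_percent=50)
print(shipping_fee([30.0, 25.0], "user-42", flag))
```

Changing `rollout_percent` controls what share of users get the new code path, and hashing the user ID keeps each user on the same side of the flag between requests.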

A/B testing statistical models

A/B testing is based on statistical methods. While you don’t need to know all the math involved to generate meaningful results, basic statistics knowledge will help you build better-quality tests. A/B testing solutions use two main statistical methods. Each is appropriate for different use cases.

Frequentist method

The frequentist method produces a confidence level that measures the reliability of your results. For example, a result that is significant at a 95 percent confidence level is one you would expect to see less than 5 percent of the time if there were no real difference between your variations.

The downside to the frequentist method is that it has a “fixed horizon,” meaning the confidence level is only valid once the test reaches its planned end.
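To make the frequentist calculation concrete, the sketch below runs a pooled two-proportion z-test on hypothetical results. This is one standard frequentist test for conversion rates, and here the results are read once at the planned end of a test. It is an illustration, not necessarily the exact method your testing platform uses:

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for variation B vs. variation A."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical results, read once at the planned end of the test
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 95% confidence" if p < 0.05 else "not significant")
```

Here, variation B's 5.8 percent conversion rate against A's 5.0 percent gives a z of about 2.5 and a p of about 0.012, which clears the 95 percent threshold. Re-running the same calculation while the test is still running, and stopping at the first p below 0.05, would inflate the false-positive rate. That is why the fixed horizon matters.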

Bayesian inference method

The Bayesian inference method provides a result probability as soon as the test starts, so there is no need to wait until the end of the test to spot a trend and interpret the data.

The challenge with the Bayesian method is that you need to know how to read the estimated interval shown during the test. With every additional conversion, that estimate becomes more precise, and you can place more trust in the probability that a given variation is the winner.
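A common way to express a Bayesian result is the probability that one variation beats the other. The sketch below estimates it by Monte Carlo sampling from Beta posteriors. It assumes flat Beta(1, 1) priors and uses hypothetical conversion counts. Platforms may use different priors or closed-form calculations:

```python
import random


def probability_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                          draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate_B > rate_A), using flat Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each rate after x conversions out of n visitors: Beta(1 + x, 1 + n - x)
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Hypothetical interim look, partway through a test...
print(probability_b_beats_a(100, 2_000, 120, 2_000))
# ...and the same test once more data has come in.
print(probability_b_beats_a(500, 10_000, 580, 10_000))
```

The interim look gives roughly 0.92, and the larger sample pushes the estimate above 0.99. This is the sense in which each additional conversion increases trust in the leading variation.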

Frequentist vs. Bayesian: How do you choose?

Choosing between frequentist and Bayesian comes down to how much you know about similar experiments you’ve conducted in the past, and how confident you are that your new experiment is truly similar to those past ones.

Suppose you’re experimenting with a similar user population, over a similar time period, on the same feature as in previous tests. In that case, a Bayesian analysis that uses those past results as its starting assumptions (its priors) may give you good results faster.

On the other hand, if you’re testing a new feature for the first time or with a different user segment, those priors may not be relevant. The less similar a test is to a previous one, the better off you are starting fresh with a pure frequentist analysis.
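In a Bayesian analysis, past experiments can enter as an informative prior. The sketch below uses the conjugate Beta-Binomial update with a hypothetical prior of Beta(50, 950). That prior encodes an assumed historical conversion rate of about 5 percent, worth roughly 1,000 visitors of evidence. The sketch compares it with a flat Beta(1, 1) prior on the same early data:

```python
import math


def beta_posterior(prior_a: float, prior_b: float,
                   conversions: int, visitors: int) -> tuple[float, float]:
    """Conjugate Beta-Binomial update; returns (posterior mean, posterior standard deviation)."""
    a = prior_a + conversions
    b = prior_b + visitors - conversions
    mean = a / (a + b)
    sd = math.sqrt(a * b / ((a + b) ** 2 * (a + b + 1)))
    return mean, sd


# Early data from a new test: 12 conversions from 200 visitors (hypothetical)
flat = beta_posterior(1, 1, conversions=12, visitors=200)
# Prior summarizing assumed past tests: about 5% conversion, worth ~1,000 visitors
informed = beta_posterior(50, 950, conversions=12, visitors=200)

print(f"flat prior:        mean {flat[0]:.3f}, sd {flat[1]:.3f}")
print(f"informative prior: mean {informed[0]:.3f}, sd {informed[1]:.3f}")
```

The informative prior gives a much narrower posterior (a standard deviation of about 0.006 versus 0.017). However, it pulls the estimate toward the historical 5 percent (about 0.052 versus 0.064 with the flat prior). That pull helps when the new test really resembles past ones and misleads when it does not.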

Server-side vs. client-side A/B testing

Generally, you can run your tests in one of two places: server-side or client-side.

- Client-side testing requires fewer technical skills, making it well-suited for digital marketers, growth teams, and experimentation programs just getting started with A/B testing. It enables teams to be agile and run experiments quickly, avoiding bottlenecks, so they receive test results faster.

- Server-side testing requires technical resources and often more complex development support. However, it does enable more powerful, scalable, and flexible experimentation.

Which approach you choose for a given test will depend on the company structure, internal resources, your development lifecycle, and the complexity of the experiments.

Client-side testing

A client-side test modifies web code directly in the end user’s browser. The server sends the web code for the page under test to the end user’s browser, where a script in the code determines which version the user will see.

It does this on the fly regardless of the browser—Chrome, Firefox, Safari, Opera, etc.

Pros of client-side testing

- Easy to get up and running with few technical skills required.

- Can be used to quickly deploy many types of front-end tests and personalizations often using a WYSIWYG graphical editor.

- Doesn’t require developer resources or backend code.

- Allows brands to run a wide variety of complex experiments including multivariate testing and personalization across multiple pages.

- Easier to hire agencies and CRO practitioners to run client-side tests.

- Can easily build tests based on browser data.

Cons of client-side testing

- Other experimentation options are limited.

- Cannot test features, back-end logic, or algorithms client-side.

- Cannot be used for mobile application testing.

- May cause a flicker effect if the snippet is poorly constructed or installed.

- Can slow down your page load performance.

- Data privacy measures, such as Apple’s ITP, can limit what data experimenters can collect and rely on, since they restrict how long visitor data can be stored in the browser.

- The WYSIWYG graphic editor may struggle to function properly on a single-page application (SPA) if poorly built.

Server-side testing

When you run tests server-side, different test versions are created on the back-end infrastructure, as opposed to the visitor’s browser.

Pros of server-side testing

- Increased control over the elements of your tests and experiments.

- More freedom with what you can test, including elements that require back-end database connections or behind-the-scenes access to your code.

- Eliminates the flicker effect of testing.

- Allows for mobile app experimentation.

- No impact on page load speed or performance.

- Can act as a hedge against client-side complications caused by browser restrictions on website visitor data.

Cons of server-side testing

- Requires developer resources to design and code tests. These technical resources are often constrained and reliance on them can hold testing programs back.

- Requires developers to configure and maintain third-party integrations for precise audience targeting using external segments or to sync experimentation data to other platforms.

- Requires you to maintain software development kits (SDKs) or use an experimentation platform that does that for you.

- Cannot be easily outsourced because it requires a developer who understands your site’s architecture and engineering.

- Limits what you can do in terms of targeting, as it’s difficult to access and use in-session visitor data (i.e., browser data) to build A/B tests.

The hybrid testing approach: Bringing together the best of client-side and server-side testing

A third option allows experimenters to enjoy client-side capabilities even when running server-side tests. In hybrid experiments, users get the best of both approaches.

Hybrid experimentation uses both client-side (JavaScript) and server-side (SDK) capabilities to make running experiments more valuable and easier for all types of teams. With a hybrid model, teams can run server-side experiments without eating up valuable developer resources.

Hybrid experimentation can enable marketers, product managers, and developers to:

- Work in a single unified platform, using tools familiar to all of them.

- Better manage tracking and reporting.

- Avoid increased dependency on the dev team to write new scripts and maintain integrations to external tools.

- Improve overall efficiency and increase cross-team collaboration around experimentation.

Kameleoon is the only hybrid experimentation platform that lets you create server-side experiments with client-side capabilities and plugs into your existing tech stack for in-depth analysis and activation.

What can you A/B test on your website or app?

You can test practically anything about your website, app, or customer journey. The question is just how you test it.

As we’ve explored, some tests are better run client-side and others server-side.

Client-side testing ideas

Client-side testing is great for testing elements that render in a customer’s browser, including:

- Page titles

- Banners

- Copy blocks

- Different CTAs

- Images

- Testimonials

- Links

- Product labels

Server-side testing ideas

Anything you can test client-side, you can also test server-side. You’ll just need more developer support to maintain your testing environment. Or you might build a hybrid experimentation environment where you run some experiments client-side and others server-side.

The tests you can only run server-side tend to involve parts of your back-end IT infrastructure that a customer’s browser can’t access. If you’re testing an app that doesn’t render on a user’s device each time you load it, your options are mostly restricted to server-side testing.

Server-side A/B tests can involve versions of:

- Algorithms

- Omnichannel experiences

- Dynamic content, especially when it loads from external sources

- Database performance adjustments

Tests you construct server-side can help you avoid tracking restrictions imposed by browsers on user devices, such as Apple’s Intelligent Tracking Prevention (ITP) in Safari and on iOS devices.

Adopting A/B testing in your company

Adopting A/B testing in your company requires a top-down approach. Here are three things to keep in mind to help you get your experimentation program off the ground.

1. Build a culture of experimentation

One of the best long-term strategies for encouraging A/B testing in your organization is to foster a culture of experimentation. Building a team culture that values experimentation and responsible data stewardship is essential if you want to build personalized, optimized experiences for all of your customers.

Kameleoon has its own stable of in-house A/B testing experts, but specialists worldwide are available to help inform your testing program. We’ve highlighted many of the leading lights in the A/B testing field in our Kameleoon Expert Directory.

Adopting a culture of experimentation is crucial if you are to put in place an effective A/B testing strategy on your website.

2. Surround yourself with the right people

Building a strong CRO team is key to achieving success with your A/B testing program. Here are a few roles you’ll want to hire for:

- Personalization specialists and project managers structure the strategy and manage the project by coordinating the different profiles and resources.

- Developers handle the integration and the technical aspect of the experiment.

- Designers with solid UX knowledge create personalized experiences and interfaces adapted to visitors’ needs.

3. Create diverse A/B testing programs

To set up your dedicated experimentation team, you can draw inspiration from the three main types of organizational structures that exist in companies today:

- A centralized structure that drives the A/B testing strategy for the entire company and prioritizes experiments according to each team’s needs.

- A decentralized structure with experts in each team to run several projects simultaneously.

- A hybrid structure with an experimentation unit and experts in each team.

How to build an A/B testing program

Small to large organizations with different levels of resources can conduct meaningful A/B tests. They just need to follow a reliable plan.

Here are seven steps for putting your testing strategy in place.

Step 1: Get executive buy-in

Experience shows that getting your organization’s leadership on board with a new testing program will greatly increase your chances of retaining test resources long-term. Start by getting executive input on what metrics you can test against that will support the company’s strategic goals (in other words, what’s on your executives’ radar).

If your executives are skeptical, consider having them propose a hypothesis for you to test, then work to show them the results. Successful or not, with good analysis, you’ll be able to show them meaningful results from your initial A/B tests.

Step 2: Measure and analyze your website’s performance

The next step is taking some time to identify what you can optimize. Every website is different, so brands must develop their strategy based on their audience's behavior, their goals, and the results obtained after analyzing their website performance.

To identify friction points on your website, you can use behavioral analysis tools such as click tracking or heatmaps.

Step 3: Formulate your hypothesis

Once you’ve identified the friction points stopping your visitors from converting, formulate hypotheses you can test with an A/B test.

A hypothesis is an educated guess you make about a customer's behavior. All good A/B tests are designed based on evidence-based hypotheses.

Here is how you can generate an experiment from a hypothesis:

- Observation: The sticky bar you installed is rarely used by website visitors.

- Hypothesis: The icons need to be clearer. Adding context could improve this weak point.

- Planned experiment: Add wording below each icon.

Step 4: Prioritize your A/B tests and establish your testing roadmap

It is vital to prioritize your actions to implement an effective A/B testing roadmap and obtain convincing results.

With the PIE framework, created by WiderFunnel, you can rank your test ideas by rating each one from 1 to 10 on three criteria to determine where to start:

- Potential: On a scale of 1 to 10, how much can you improve this page?

- Importance: What is the value of the traffic (volume, quality) on this page?

- Ease of implementation: How easy is it to implement the test (10 = very easy, 1 = very difficult)?

Total the three scores for each idea. The highest totals tell you which tests to launch first.
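Scoring and ranking a backlog this way is simple arithmetic. The sketch below uses a hypothetical backlog with made-up scores. The class and field names are illustrative and not part of any tool:

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    potential: int   # 1-10: how much this page can improve
    importance: int  # 1-10: value of the traffic (volume, quality) on this page
    ease: int        # 1-10: 10 = very easy, 1 = very difficult

    def __post_init__(self) -> None:
        for score in (self.potential, self.importance, self.ease):
            if not 1 <= score <= 10:
                raise ValueError("PIE scores must be between 1 and 10")

    @property
    def pie_total(self) -> int:
        return self.potential + self.importance + self.ease


# Hypothetical backlog of test ideas and scores
backlog = [
    ExperimentIdea("Add wording below sticky-bar icons", potential=6, importance=5, ease=9),
    ExperimentIdea("Shorten the checkout form", potential=8, importance=9, ease=4),
    ExperimentIdea("Swap the homepage hero image", potential=4, importance=7, ease=8),
]

# Highest total first: that is where to start
for idea in sorted(backlog, key=lambda idea: idea.pie_total, reverse=True):
    print(idea.pie_total, idea.name)
```

Some descriptions of PIE average the three scores instead of totaling them. Because every idea is divided by the same number, the ranking comes out the same.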

Step 5: Test

Once you’ve finished preparing, it’s time to start your test. Remember, don’t edit your test once it’s in progress. Instead, let it run to completion.

Step 6: Analyze your A/B test results

It’s crucial to analyze and interpret your test results. After all, A/B testing is about learning and making decisions based on the analysis of your experiments.

For effective analysis of your results:

- Learn to recognize false positives

- Establish representative visitor segments

- Don’t test too many variations at the same time

- Don’t give up on a test idea after one failure

Step 7: Repeat

Once you’ve collected and analyzed results, you need to take what you’ve learned about your customers and their journey and use those findings to formulate your next round of tests.

A/B testing and experimentation strategy are all about building feedback loops. Findings lead to more findings, optimization leads to further optimization, and rising customer engagement leads to more engagement.

Once your last round of testing is done, work on the next and keep the momentum going.

Tips to improve your A/B testing program

One of the most effective ways to improve an existing experimentation program is to design tests for specific audience segments. If you’re testing your entire audience, then what you’re really testing is the average—a middle-of-the-road idealized customer who doesn’t actually exist.
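To see how an average can mislead, consider made-up results for a test split by new and returning visitors. In the sketch below, variation B wins on the pooled numbers but loses among returning visitors:

```python
# Made-up numbers for illustration: (conversions, visitors) per segment and variation
results = {
    "new visitors":       {"A": (200, 5_000), "B": (300, 5_000)},
    "returning visitors": {"A": (300, 2_000), "B": (260, 2_000)},
}


def conversion_rate(conversions: int, visitors: int) -> float:
    return conversions / visitors


# Per-segment rates
for segment, by_variation in results.items():
    rates = {name: f"{conversion_rate(*counts):.1%}" for name, counts in by_variation.items()}
    print(segment, rates)

# Pooled rates: what an unsegmented test reports
totals = {}
for name in ("A", "B"):
    conversions = sum(results[segment][name][0] for segment in results)
    visitors = sum(results[segment][name][1] for segment in results)
    totals[name] = (conversions, visitors)
print("all visitors", {name: f"{conversion_rate(*counts):.1%}" for name, counts in totals.items()})
```

Pooled, B converts at 8.0% against 7.1% for A, yet returning visitors convert at 13.0% on B versus 15.0% on A. Real segment-level results would still need their own sample-size and significance checks. These figures only illustrate the averaging effect.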

Obviously, testing each individual customer isn’t feasible. But the quality of your results will improve significantly if you break your testing audience into some common sense segments.

Some possible ways to begin segmenting your audience include:

- By demographics

- By geography

- New vs. returning visitors

- Mobile device vs. desktop users

Each of these segments will have its own motivators. Targeting them will reveal more meaningful insights into their behavior and buying patterns. Here are a few best practices to follow when you optimize your testing process to work with different segments.

Start simple

Don’t go overboard with overly granular segments. Instead, start with some common sense divisions for testing, like the higher-level segments suggested above.

For example, new vs. returning visitors is an easy segmentation most product managers can make in their data.

Refine your segments

Experimentation is an iterative process, meaning you must take it step by step. Once you have meaningful results for these higher-level segments, you can go more granular and try to discover important nuances about smaller and smaller ones.

Favor recent data

Business moves fast. Your experimentation data should move even faster.

Someone who visited your site for a single, long session 90 days ago isn’t going to make a good comparison to someone who visited your site three times this week.

Make sure your segments consider visitors using your service within the same time frame, and ideally, ensure that the time frame is as recent as possible.

Common A/B testing mistakes and how to avoid them

A/B testing can offer great value and is easy for digital business professionals to pick up. But there are some pitfalls you can encounter when first starting out.

Here are some of the most common challenges first-time A/B testers might face and how to overcome them.

Lack of research

Even your very first test requires some research. Your tests need to be grounded in understanding user behavior backed up by existing data.

That data could be something as basic as straight conversion rates, page visits, or unique user counts, but you should structure even the smallest, earliest tests based on some amount of data and not just pure intuition.

Too small sample sizes

A test with too few participants is unlikely to reach statistical significance. In other words, you won’t collect enough data for any results to be truly meaningful. Testing is only effective when you can tap into a meaningful sample of unique visits.

The specific sample size you need will depend on a few factors including:

- Total site visitors

- Current conversion rate

- Minimum detectable effect (MDE) you expect to see—or the minimum change in user behavior you hypothesize your test version will generate
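For conversion-rate tests, a widely used approximation for the sample size each variation needs is the two-proportion formula below. This sketch assumes a two-sided test at 95 percent confidence and 80 percent power, which are common defaults rather than requirements. It expresses the MDE as a relative lift over the current conversion rate. It then converts the result into a rough duration using an assumed daily traffic figure, which is where total site visitors come in:

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float, relative_mde: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in each variation to detect a relative lift of `relative_mde`."""
    p1 = baseline_rate                       # current conversion rate
    p2 = baseline_rate * (1 + relative_mde)  # rate the variation would need to reach
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_power = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


n = sample_size_per_variation(baseline_rate=0.05, relative_mde=0.10)
daily_visitors = 4_000  # assumed traffic entering the test, split across 2 variations
days = math.ceil(2 * n / daily_visitors)
print(n, days)
```

With a 5 percent baseline and a 10 percent relative MDE, this comes to a little over 31,000 visitors per variation, or about 16 days at the assumed traffic. The required sample scales roughly with the inverse square of the effect size, so halving the MDE roughly quadruples the sample.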

Altering settings and parameters mid-test

It happens to everyone: you launch a test and then a few days later realize you could have structured it better. However, making modifications mid-test will compromise the quality of your results because you’ll no longer be exposing users to the same testable conditions.

Instead, accept that there will never be a perfect test. Just focus on getting reliable results from your current test, analyze the results, and make the next test even better.

Not checking for Sample Ratio Mismatch (SRM)

Sample ratio mismatch (SRM) is when the traffic each variation actually receives differs significantly from the split you configured. For example, one variation might unintentionally receive far more traffic than the other in a 50/50 test.

An SRM usually signals a problem with assignment or tracking. If your sample ratio is not balanced, you can’t trust your results, even if they look significant.

Fortunately, you can run a chi-square goodness-of-fit test on your visitor counts to check whether they match the split you intended. You can also use Kameleoon’s SRM checker to ensure your results are free from any SRM.
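For a two-variation test, that goodness-of-fit check takes only a few lines. The sketch below is a standard chi-square test with one degree of freedom. It assumes the intended split is expressed as the share of traffic for variation A (50/50 by default). It is not the implementation behind Kameleoon’s SRM checker:

```python
import math


def srm_p_value(observed_a: int, observed_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) for a two-way traffic split."""
    total = observed_a + observed_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((observed_a - expected_a) ** 2 / expected_a
            + (observed_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, the chi-square survival function is erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


print(srm_p_value(5_000, 5_000))  # perfectly balanced split
print(srm_p_value(5_200, 4_800))  # 52/48 split on 10,000 visitors
```

A balanced 5,000/5,000 split gives p = 1.0, while 5,200/4,800 gives a p of roughly 0.00006, a strong sign of SRM. Because the check runs on every test, a strict threshold such as p < 0.001 is a common choice before declaring a mismatch.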

Not considering Intelligent Tracking Prevention (ITP)

Apple’s Intelligent Tracking Prevention (ITP) blocks third-party cookies and limits first-party cookies set by client-side scripts to seven days, overriding the default expiration dates set by platforms like Google Analytics. A user visiting on a Monday who returns next on Tuesday of the following week will be treated as a wholly new visitor.
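The Monday-to-Tuesday example is simple date arithmetic: eight days have passed, one more than the seven-day cap. The sketch below models it at day granularity. It assumes the cookie’s seven-day clock starts at the visitor’s last visit and ignores every other way a cookie can be cleared. The function name is hypothetical:

```python
from datetime import date, timedelta

SEVEN_DAY_CAP = timedelta(days=7)  # ITP limit on script-set cookies, as described above


def counted_as_new_visitor(last_visit: date, next_visit: date,
                           cap: timedelta = SEVEN_DAY_CAP) -> bool:
    """True if the identifying cookie would expire before the next visit (day granularity)."""
    return next_visit - last_visit > cap


monday = date(2024, 6, 3)                  # a Monday
next_tuesday = monday + timedelta(days=8)  # Tuesday of the following week
print(counted_as_new_visitor(monday, next_tuesday))                # True
print(counted_as_new_visitor(monday, monday + timedelta(days=6)))  # False
```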

If you don’t account for ITP, your new visitor metric in Google Analytics will be completely inaccurate for any Safari traffic. Many other metrics will also be distorted, such as the number of unique visitors or the time to buy.

Look for an experimentation platform that stores visitor data in Local Storage (LS) instead of cookies, like Kameleoon. This can bypass ITP’s cookie restrictions and give you better data about your entire visitor population.

Incorrectly analyzing test results

It doesn’t matter whether your test succeeded. If you don’t take the time to analyze your results and understand why your A/B test succeeded or failed, you’re not actually improving anything.

When you think about it this way, anytime you generate statistically significant results and take the time to analyze them, the test is a success because you’ll learn something new about your users.

It doesn’t matter if your variant didn’t convert more. If you understand why it didn’t, you’ve improved your understanding of your customer experience.

Not developing a culture of experimentation

One heroic individual cannot support an entire experimentation program. It takes top-down and bottom-up support throughout the organization.

Your marketing, product, design, IT, and other teams must all pull in the same direction. It sometimes takes a single champion to start the program, but experimentation programs take time to show their value.

To sustain your efforts, you must build a culture of testing and experimentation within your organization.

What to look for in an A/B testing platform

When evaluating different A/B testing platforms, you want to look for the ones that support your testing process as much as possible. Of course, individual technical features are important, but experimentation is a rigorous process, and you need process-oriented tools.

To help you evaluate different testing solutions, we’ve broken down essential features by the points in the testing process where you need them. Look for a testing solution that addresses as many of your own team’s pain points as possible.

Goal Setting

As discussed in our A/B testing strategy section, before you configure any specific tests, you first need to get buy-in from senior leadership and lay out your goals for your testing program. This stage is crucial for ensuring that your tests target the right visitors effectively.

What user experience do you want to improve? For example, you can test variants A and B of a call to action on a landing page. In that case, what KPIs do you want to measure? Set user engagement goals (the number of clicks on the CTA) and see which variant performs best.

A/B testing platform features to consider

- Wide selection of measurable test KPIs: clicks on a particular page element, scrolls per page, page views, retention rate per page, time spent on the website, or the number of pages viewed.

- The ability to create customized goals: personalized e-commerce transactions including attributes like transaction ID, order amount, and payment method.

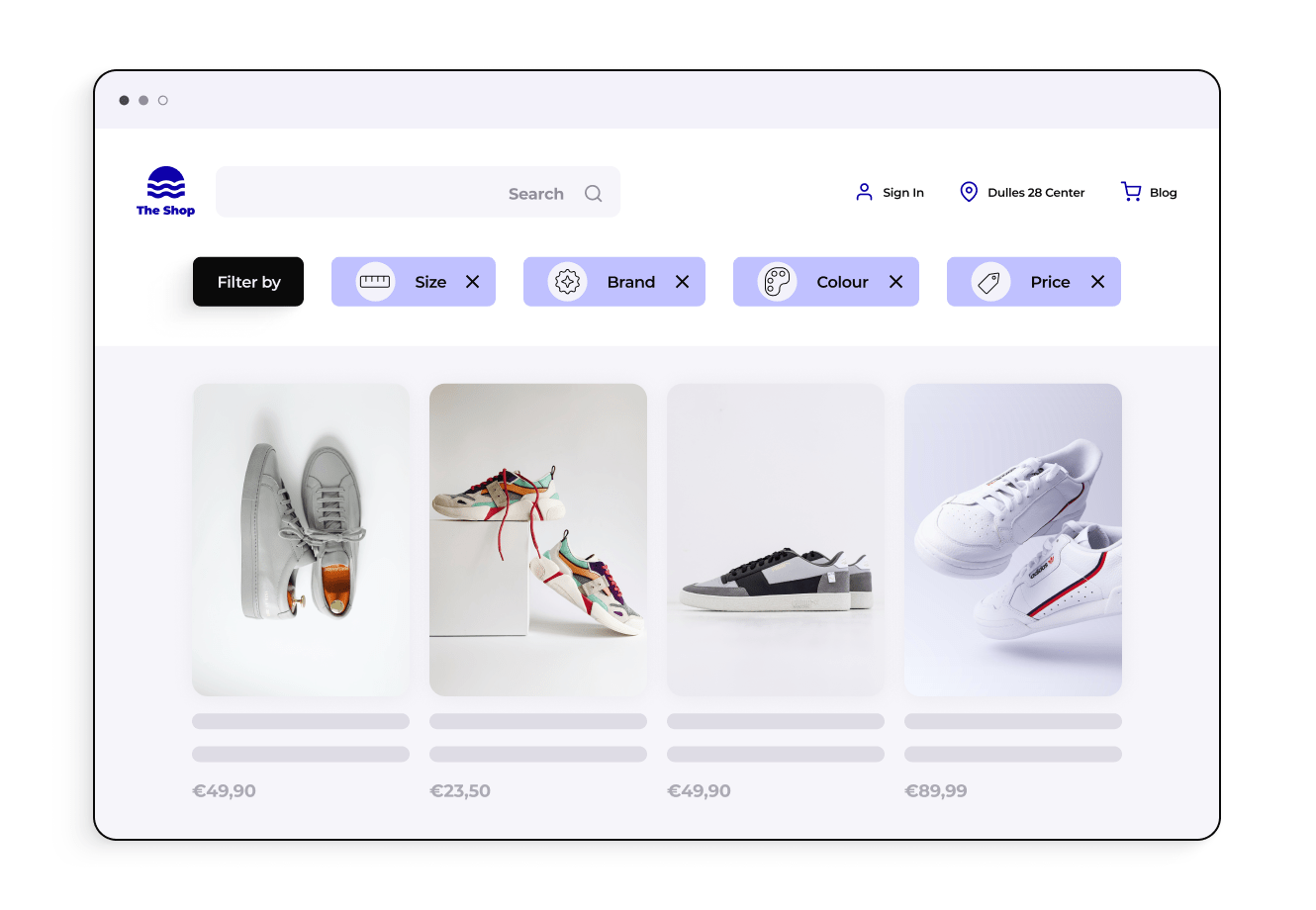

Advanced audience segmentation

You must know your audience well to conduct high-quality testing on your website or app. You typically don’t want to carry out tests on your entire audience because, as we’ve discussed, that means you’re testing a watered-down average visitor profile that isn’t very meaningful.

Therefore, you need to be able to segment your visitors before you conduct A/B tests.

A/B testing platform features to consider

- Real-time visitor data collection

- A targeting engine that can segment visitors based on behavioral, technical, or contextual criteria

- Segment creation based on data source: landing page, referring website, or type of traffic

- Segment creation based on digital footprint: browser language, type of device, IP address, screen resolution, or browser version

- Segment creation based on browser history: new vs. returning visitors, or type of customer

- Segment creation based on date and time, or other external information

- Segment creation based on data provided through app integrations

- GDPR-compliant segmentation

Experimentation triggers

A/B tests need to be activated by certain user behaviors or browser events. In experimentation, these are called triggers. Therefore, you need an experimentation solution that can trigger tests based on how your users interact with your service.

A/B testing platform features to consider

- Page triggers: the number of page views, time spent on site, and element presence on page

- Generic triggers: for example, mouse-out events and other specific API events

Easy to create elements

Once you’ve set the goals, identified the target audience segments, and the triggers for your A/B test, you’re ready to build the experiment itself. That means creating the graphics, setting the right parameters, and managing its launch.

Your A/B testing solution must allow you to easily create versions of the web elements you want to test.

A/B testing platform features to consider

- An easy-to-use graphic editor for creating custom elements

- Ability to activate the editor as a WYSIWYG overlay on any page, online or offline

- Ability to browse your site pages like a user to verify experience

- Automatic detection of element types (e.g., block, image, link, button, form)

- Contextualized menu with options appropriate to each element type

- Alternate developer coding interface supporting CSS and JavaScript

- Widget library

- A simulation tool to check test parameters, such as how variants display, targeting, KPIs, and potential conflict with other experiments

- Integrations with major martech vendor categories, like analytics, CDP/DMP, automation, consent, CMS, and email

Advanced test building & management

A/B tests can be simple, like experimenting with the color of the CTAs on your product pages.

However, they can also be much more complex, such as a test on your entire conversion funnel. You could also use your experimentation platform for other important, associated functions, such as dynamically managing traffic distribution to avoid Sample Ratio Mismatches.

Some experimentation teams want to run technical or large-scale projects with their development teams internally. In this case, relying on a testing solution with robust development and integration features is important.

Server-side test management is essential to some teams’ testing strategies. Server-side and hybrid testing services test your optimization hypotheses on back-end infrastructure and code rather than in your users’ browsers. And yet many tools don’t offer it.

A/B testing platform features to consider

- Multivariate test management

- Multi-site and multi-domain management options

- Custom Attribution Window to properly attribute conversions based on your unique sales cycle

- Traffic allocation algorithm management (for linear or multi-armed bandit testing)

- Test scheduling

- Feature flagging, which allows you to roll out different features based on rules, schedules, or KPIs

- Estimated test duration function

- Server-side test management

- Logic caching

- Full stack development tools (REST API and SDK library, developer portal)

- Automatic application management

- SDKs

- API library

Simplified problem management

No experiment ever goes off without a hitch, and that’s okay. What matters is that you have the necessary tools to manage problems when they inevitably arise.

Improperly built tests might negatively impact your entire site’s performance. To mitigate the chance of this happening, you should choose a solution that has an optimized architecture for A/B testing.

A/B testing platform features to consider

- No flicker generation

- ITP management to conduct A/B tests around Apple’s reduced cookie lifespan

- Automatic management of user consent

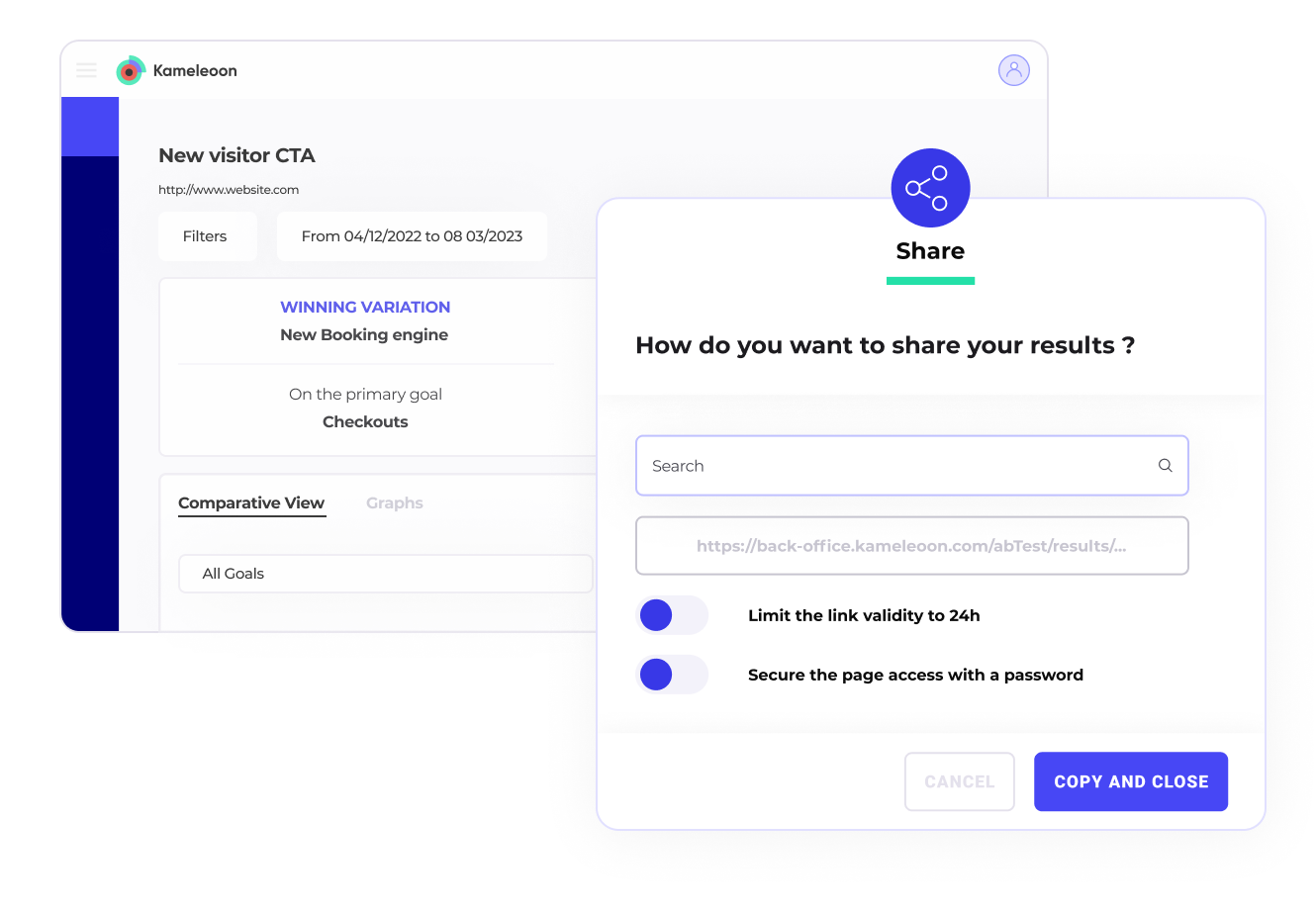

Easy-to-analyze results

Your job isn’t done once data is collected. Next is the truly important part: analyzing your results.

You need to look at results, decide what changes need to be made to your website, and update your conversion rate optimization roadmap. To do these things, you need easy-to-understand and customizable reporting tools to track the test results that matter to you.

A/B testing platform features to consider

- A/B testing reporting tool with key performance indicators (KPIs)—for example, calculation of conversion improvement or associated accuracy rates

- Statistical analysis options—for example, frequentist or Bayesian, by visitor or by visit, by converted visit, or for all measured conversions

- Data visualization tools, including interactive graphics, time periodization, and zoom

- Personalized reports

- Test criteria filters

- Comparative view of multiple periods

- Sharing tools—for example, an export feature or URL sharing

- Alert creation based on customizable business criteria

- A decision support system

- Integration of third-party analysis solutions
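Here is a minimal frequentist sketch of the kind of conversion-improvement figure a reporting tool calculates. It computes each variation's conversion rate, the relative uplift of B over A, and a confidence interval for the absolute difference using the normal (Wald) approximation. It assumes visitor-level binary conversions and reasonably large samples. The function name `uplift_report` is hypothetical, and real platforms may use different estimators or Bayesian methods.

```python
import math
from statistics import NormalDist


def uplift_report(conv_a: int, n_a: int, conv_b: int, n_b: int,
                  confidence: float = 0.95) -> dict[str, float]:
    """Hypothetical helper: conversion rates, relative uplift, and a CI."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Standard error of the difference between two independent proportions.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {
        "rate_a": p_a,
        "rate_b": p_b,
        "relative_uplift": diff / p_a,
        "diff_ci_low": diff - z * se,
        "diff_ci_high": diff + z * se,
    }
```

Suppose A has 500 conversions out of 10,000 visitors and B has 580 out of 10,000. The relative uplift is then 16%, and the 95% interval for the absolute difference stays above zero.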

Support services for your entire testing process

No one should go it alone when conducting experiments. As we mentioned earlier, testing isn’t a time for heroics. You need broad organizational buy-in and the proper supporting tools and services. Customer support from your solution provider is a critical component.

Everyone’s support requirements will differ depending on their brand's maturity and testing program. That’s why working with a provider that can adapt to your specific needs is important.

A/B testing platform features to consider

- For more mature companies, the ability to function independently with self-service support tools, like training via tutorials, and permanent access to the helpdesk

- A dedicated Customer Success Manager assigned to your account who can provide strategic support

- A dedicated Technical Account Manager assigned to your account for more technical projects

- The ability to collaborate with a wide network of agencies

Running privacy-compliant A/B tests

Many industries operate under data privacy regulations. Being in finance, healthcare, or education doesn’t mean you can’t run A/B tests on your customer data. It just takes extra preparation.

The same goes for companies subject to data protection laws, like the European Union’s (EU) General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA).

Companies working under these restrictions can and should optimize their digital services. Here are some of the key things you need to know about how to run privacy-compliant A/B tests.

- Any experimentation is better than zero experimentation

- Building a culture of experimentation might be even more important in regulated sectors

- A/B testing does not need to use personally identifiable information (PII)

- Use experimentation tools designed to work with PII

Regulations often force design, product, and marketing teams to be risk-averse. The trick is finding acceptable risks to take when experimenting with the customer experience.

Get started with A/B testing

Basing your strategy on A/B testing and rigorous data analysis will make your organization more competitive and agile. Most importantly, you’ll get high-quality, data-backed feedback on how your customers feel about your service.

Implementing an A/B testing platform gives you powerful new insights into your customers’ journeys and delivers tangible benefits, like reduced bounce rates and increased conversion rates.

But how do you choose an experimentation platform that fits your needs and your team’s skill set?

Kameleoon’s A/B Testing Checklist gives you the information you need to make the right choice.

Usually, you’re testing two versions: your original version, the A version—also called the control—against the modified B version you hypothesize will perform better. Before building your A/B test, you decide what metrics to measure so you can quantify what “better” means in your test results.

An A/B test presents multiple versions of a webpage or an app to users to determine which version leads to more positive outcomes. A/B testing is a relatively easy way to improve user engagement, offer more engaging content, reduce bounce rates, and improve conversion rates.

Every time you conduct an A/B test, you learn more about how your customers engage with your site or app. Over time, a comprehensive testing program creates a feedback loop that makes your content more and more effective and provides a foundation for new, even more insightful tests.

A/B testing can be as simple or as complex as you want. For example, you can conduct simple version tests where you compare the effectiveness of a new B version against an original A version. You can also conduct multivariate tests (MVT) where you compare the effectiveness of different combinations of changes, or test three or more variations simultaneously, which is called A/B/n testing. If a change to your codebase modifies your user experience, there is a way to test it.
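Multivariate tests grow quickly because every combination of changes becomes its own variation. The sketch below shows a full-factorial MVT design. The page elements and their values are hypothetical and were chosen only for illustration.

```python
from itertools import product

# Hypothetical elements under test and the values each one can take.
elements = {
    "headline": ["original", "benefit-led"],
    "cta_color": ["blue", "green", "orange"],
    "hero_image": ["product", "lifestyle"],
}


def full_factorial(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return every combination of element values, one dict per variation."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


variations = full_factorial(elements)
print(len(variations))  # 2 * 3 * 2 = 12 variations
```

All 12 combinations share the traffic, so each one receives roughly a twelfth of visitors. This is why multivariate tests need far more traffic than a simple A/B test to reach reliable results.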

A/B testing is an incredibly versatile method for generating insights. It can be valuable to many teams, including marketing, product, and growth teams. Marketing teams can construct A/B tests to reveal which campaigns lead customers down their funnel effectively. Product teams can test user retention and engagement. Growth teams can easily use A/B tests to evaluate different components of their customer journey.

People use A/B testing to compare two versions of a webpage, app feature, or other marketing materials to see which one performs better. This experimentation method helps businesses make data-driven decisions by showing them which version leads to higher engagement, conversions, or other desired outcomes.

For example, in healthcare marketing, A/B testing can help determine which call-to-action (CTA) copy gets more patients to book an appointment online, ultimately improving communication and patient engagement. By testing different elements and analyzing the results, organizations can optimize their strategies to better meet their goals.

A/B testing is quantitative. The experimentation method compares numerical data, such as click-through rates or conversion rates, from two versions to see which one performs better. It uses statistics to support data-backed decisions.

Usability testing focuses on how real users interact with a product to identify issues or areas for improvement. The objective of this testing method is to understand user behavior and gather qualitative feedback.

A/B testing, on the other hand, compares two versions of a product, product feature, or web page to see which one performs better based on quantitative data, like conversion rates or click-through rates. In short, usability testing helps make a product easier to use, while A/B testing helps optimize its performance.

A/B testing is a specific type of hypothesis testing where you compare two versions of something to see which one performs better. Hypothesis testing is a broader concept used in statistics to determine if there is enough evidence to support a specific hypothesis.

In A/B testing, your hypothesis might be that version B of a webpage will get more clicks than version A. You then run the test to see if the data supports this hypothesis.
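One standard frequentist way to test a hypothesis like this is a one-sided two-proportion z-test. The null hypothesis is that both versions share the same click-through rate, and the alternative is that B's rate is higher. The sketch below uses the pooled standard error under the null. `one_sided_z_test` is a hypothetical helper name, and the click counts in the example are illustrative.

```python
import math
from statistics import NormalDist


def one_sided_z_test(clicks_a: int, n_a: int,
                     clicks_b: int, n_b: int) -> tuple[float, float]:
    """Hypothetical helper: test H0 rate_B = rate_A against H1 rate_B > rate_A.

    Returns (z statistic, one-sided p-value).
    """
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    # Pooled rate under H0, where both versions share one click-through rate.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 1 - NormalDist().cdf(z)  # upper-tail probability
    return z, p_value
```

With 200 clicks out of 4,000 for A and 260 out of 4,000 for B, z is about 2.9 and the one-sided p-value is well below 0.05. That means the data supports the hypothesis that B gets more clicks at that significance level.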

A/B testing is a type of controlled experiment. In A/B testing, you create two (or more) versions of a variable and randomly assign users to each version to control external factors. This way, any differences in outcomes can be attributed to the changes you made, making it a controlled and reliable method for testing.

A/B testing and split testing are often used interchangeably, but they can have slightly different meanings.

A/B testing typically involves comparing two versions (A and B) of a single element to see which one performs better. Split testing, on the other hand, can involve comparing multiple versions of multiple elements at once. So, while all A/B tests are split tests, not all split tests are A/B tests.

A/B testing is used by a wide range of professionals, including marketers, web developers, product managers, product developers, and healthcare providers, and across many industries, from large ecommerce companies to banks. Anyone looking to optimize their digital content, improve user experience, or make data-driven decisions can benefit from A/B testing.

A/B testing should not be used for making major design or strategy changes without prior research. This testing method is best suited for testing small, incremental changes. Additionally, A/B testing shouldn’t be used when there’s insufficient traffic to gather reliable results.
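Before launching, you can check whether you have enough traffic by estimating the required sample size per variation. The sketch below uses the standard normal-approximation formula for comparing two proportions with a two-sided test. The baseline rate, minimum detectable effect, and daily traffic in the example are hypothetical inputs. A platform's built-in duration estimator may use a different method.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline: float, relative_mde: float,
                              alpha: float = 0.05, power: float = 0.8) -> int:
    """Hypothetical helper: visitors needed per variation to detect a relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def estimated_days(baseline: float, relative_mde: float, daily_visitors: int,
                   n_variations: int = 2) -> int:
    """Hypothetical helper: days needed if all daily visitors enter the test."""
    per_variation = sample_size_per_variation(baseline, relative_mde)
    return math.ceil(per_variation * n_variations / daily_visitors)
```

Take a 5% baseline conversion rate and a 10% relative minimum detectable effect. This requires roughly 31,000 visitors per variation. A site with 2,000 eligible visitors a day would therefore need about a month to run a two-variation test.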

- Graphical editor for codeless test-building

- Customizable user segmentation tools

- Built-in widget library

- Simulation tool to evaluate test parameters

- Comparative analysis tools

- Report sharing

- Decision support systems

Do you have the skills available to carry out all of those tests? The higher the volume and the greater the complexity of the tests you want to conduct, the more likely you are to benefit from a testing solution like Kameleoon Hybrid.