The Vision API can detect and transcribe text from PDF and TIFF files stored in Cloud Storage.

Document text detection from PDF and TIFF must be requested using the files:asyncBatchAnnotate function, which performs an offline (asynchronous) request and provides its status using the operations resources.

Output from a PDF/TIFF request is written to a JSON file created in the specified Cloud Storage bucket.

Limitations

The Vision API accepts PDF/TIFF files up to 2000 pages. Larger files will return an error.

Authentication

API keys are not supported for files:asyncBatchAnnotate requests. See Using a service account for instructions on authenticating with a service account.

The account used for authentication must have access to the Cloud Storage bucket that you specify for the output (roles/editor or roles/storage.objectCreator or above).

You can use an API key to query the status of the operation; see Using an API key for instructions.

Document text detection requests

Currently PDF/TIFF document detection is only available for files stored in Cloud Storage buckets. Response JSON files are similarly saved to a Cloud Storage bucket.

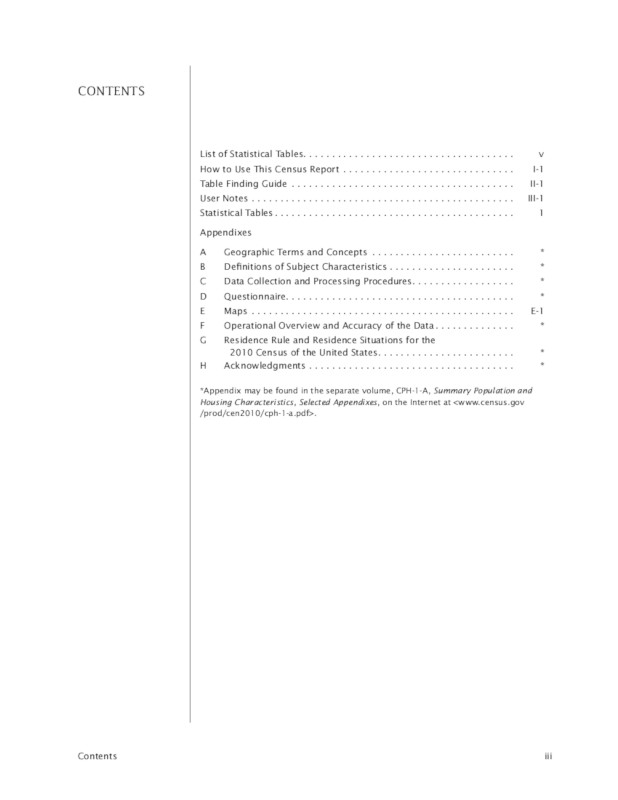

gs://cloud-samples-data/vision/pdf_tiff/census2010.pdf. Source: United States Census Bureau.

REST

Before using any of the request data, make the following replacements:

- CLOUD_STORAGE_BUCKET: A Cloud Storage bucket/directory to save output files to, expressed in the following form: gs://bucket/directory/

- CLOUD_STORAGE_FILE_URI: The path to a valid file (PDF/TIFF) in a Cloud Storage bucket. You must have at least read privileges to the file. Example: gs://cloud-samples-data/vision/pdf_tiff/census2010.pdf

- FEATURE_TYPE: A valid feature type. For files:asyncBatchAnnotate requests you can use the following feature types: DOCUMENT_TEXT_DETECTION, TEXT_DETECTION

- PROJECT_ID: Your Google Cloud project ID.

Field-specific considerations:

- inputConfig: replaces the image field used in other Vision API requests. It contains two child fields:

  - gcsSource.uri: the Google Cloud Storage URI of the PDF or TIFF file (accessible to the user or service account making the request).

  - mimeType: one of the accepted file types: application/pdf or image/tiff.

- outputConfig: specifies output details. It contains two child fields:

  - gcsDestination.uri: a valid Google Cloud Storage URI. The bucket must be writeable by the user or service account making the request. The filename will be output-x-to-y, where x and y represent the PDF/TIFF page numbers included in that output file. If the file exists, its contents will be overwritten.

  - batchSize: specifies how many pages of output should be included in each output JSON file.
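The interaction between batchSize and the output-x-to-y naming rule can be sketched in Python. This is a minimal illustration, not part of the Vision API: the function name expected_output_files is hypothetical, the .json suffix follows the output-1-to-1.json example shown later on this page, and the assumption that the final batch simply ends at the last page is ours.

```python
def expected_output_files(total_pages, batch_size, prefix=""):
    """Return the output object names one would expect for a document.

    Assumption: pages are numbered from 1, each file covers batch_size
    consecutive pages, and the last file stops at total_pages.
    """
    if total_pages < 1 or batch_size < 1:
        raise ValueError("total_pages and batch_size must be positive")
    names = []
    for first in range(1, total_pages + 1, batch_size):
        last = min(first + batch_size - 1, total_pages)
        names.append(f"{prefix}output-{first}-to-{last}.json")
    return names
```

For example, a 5-page file with a batchSize of 2 would, under these assumptions, produce three files covering pages 1 to 2, 3 to 4, and 5 to 5.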

HTTP method and URL:

POST https://vision.googleapis.com/v1/files:asyncBatchAnnotate

Request JSON body:

{
  "requests": [
    {
      "inputConfig": {
        "gcsSource": {
          "uri": "CLOUD_STORAGE_FILE_URI"
        },
        "mimeType": "application/pdf"
      },
      "features": [
        {
          "type": "FEATURE_TYPE"
        }
      ],
      "outputConfig": {
        "gcsDestination": {
          "uri": "CLOUD_STORAGE_BUCKET"
        },
        "batchSize": 1
      }
    }
  ]
}
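If you prefer to generate the request body rather than edit it by hand, the following Python sketch assembles the same JSON structure and checks the values against the constraints listed above. The helper names build_request_body and dump_request are hypothetical, and the gs:// prefix check is a simple local sanity check, not validation performed by the API.

```python
import json

ALLOWED_MIME_TYPES = ("application/pdf", "image/tiff")
ALLOWED_FEATURES = ("DOCUMENT_TEXT_DETECTION", "TEXT_DETECTION")


def build_request_body(source_uri, destination_uri,
                       feature_type="DOCUMENT_TEXT_DETECTION",
                       mime_type="application/pdf", batch_size=1):
    """Build the files:asyncBatchAnnotate request body as a dict."""
    for uri in (source_uri, destination_uri):
        if not uri.startswith("gs://"):
            raise ValueError(f"expected a gs:// URI, got {uri!r}")
    if mime_type not in ALLOWED_MIME_TYPES:
        raise ValueError(f"unsupported mimeType: {mime_type!r}")
    if feature_type not in ALLOWED_FEATURES:
        raise ValueError(f"unsupported feature type: {feature_type!r}")
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return {
        "requests": [
            {
                "inputConfig": {
                    "gcsSource": {"uri": source_uri},
                    "mimeType": mime_type,
                },
                "features": [{"type": feature_type}],
                "outputConfig": {
                    "gcsDestination": {"uri": destination_uri},
                    "batchSize": batch_size,
                },
            }
        ]
    }


def dump_request(body):
    """Serialize the body as indented JSON, ready to save as request.json."""
    return json.dumps(body, indent=2)
```

The string returned by dump_request can be written to request.json and sent with either of the commands that follow.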

To send your request, choose one of these options:

curl

Save the request body in a file named request.json, and execute the following command:

curl -X POST \
  -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  -H "x-goog-user-project: PROJECT_ID" \
  -H "Content-Type: application/json; charset=utf-8" \
  -d @request.json \
  "https://vision.googleapis.com/v1/files:asyncBatchAnnotate"

PowerShell

Save the request body in a file named request.json, and execute the following command:

$cred = gcloud auth print-access-token
$headers = @{ "Authorization" = "Bearer $cred"; "x-goog-user-project" = "PROJECT_ID" }

Invoke-WebRequest `
  -Method POST `
  -Headers $headers `
  -ContentType: "application/json; charset=utf-8" `
  -InFile request.json `
  -Uri "https://vision.googleapis.com/v1/files:asyncBatchAnnotate" | Select-Object -Expand Content

A successful asyncBatchAnnotate request returns a response with a single name field:

{ "name": "projects/usable-auth-library/operations/1efec2285bd442df" }

This name represents a long-running operation with an associated ID (for example, 1efec2285bd442df), which can be queried using the v1.operations API.
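As a small illustration, the operation ID can be pulled out of the returned name with a few lines of Python. The function name operation_id is hypothetical, and the sketch assumes only that the name ends in an operations/ID pair, as in the examples on this page.

```python
def operation_id(name):
    """Return the trailing ID from an operation name.

    Accepts both 'projects/<project>/operations/<id>' and 'operations/<id>'.
    """
    parts = name.strip("/").split("/")
    if len(parts) < 2 or parts[-2] != "operations" or not parts[-1]:
        raise ValueError(f"not an operation name: {name!r}")
    return parts[-1]
```

Calling operation_id on the name shown above yields 1efec2285bd442df.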

To retrieve your Vision annotation response, send a GET request to the v1.operations endpoint, passing the operation ID in the URL:

GET https://vision.googleapis.com/v1/operations/operation-id

For example:

curl -X GET -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" \
  -H "Content-Type: application/json" \
  https://vision.googleapis.com/v1/projects/project-id/locations/location-id/operations/1efec2285bd442df

If the operation is in progress:

{ "name": "operations/1efec2285bd442df", "metadata": { "@type": "type.googleapis.com/google.cloud.vision.v1.OperationMetadata", "state": "RUNNING", "createTime": "2019-05-15T21:10:08.401917049Z", "updateTime": "2019-05-15T21:10:33.700763554Z" } }

Once the operation has completed, the state shows as DONE and your results are written to the Google Cloud Storage file you specified:

{ "name": "operations/1efec2285bd442df", "metadata": { "@type": "type.googleapis.com/google.cloud.vision.v1.OperationMetadata", "state": "DONE", "createTime": "2019-05-15T20:56:30.622473785Z", "updateTime": "2019-05-15T20:56:41.666379749Z" }, "done": true, "response": { "@type": "type.googleapis.com/google.cloud.vision.v1.AsyncBatchAnnotateFilesResponse", "responses": [ { "outputConfig": { "gcsDestination": { "uri": "gs://your-bucket-name/folder/" }, "batchSize": 1 } } ] } }
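The two responses above suggest a simple polling loop: fetch the operation, check the done field, and read the output location once it is set. The Python sketch below shows one way to structure that loop. The names wait_for_operation and output_uris are hypothetical, the HTTP call is left to a caller-supplied fetch function so that no particular client is assumed, and the check for an error field relies on the general shape of long-running operation resources rather than on anything shown on this page.

```python
import time


def wait_for_operation(fetch, interval_s=5.0, max_polls=60):
    """Call fetch() until the returned operation dict reports done.

    fetch is any zero-argument callable returning the parsed JSON of a
    GET on the operation (for example, a wrapper around an HTTP client).
    """
    for _ in range(max_polls):
        op = fetch()
        if op.get("done"):
            # Assumption: a failed operation carries an "error" field
            # instead of "response".
            if "error" in op:
                raise RuntimeError(f"operation failed: {op['error']}")
            return op
        time.sleep(interval_s)
    raise TimeoutError("operation did not finish within the polling budget")


def output_uris(op):
    """Collect the gcsDestination URIs from a completed operation."""
    responses = op.get("response", {}).get("responses", [])
    return [r["outputConfig"]["gcsDestination"]["uri"] for r in responses]
```

Passing the fetch function in as a parameter keeps the loop independent of how you authenticate and makes it easy to exercise with canned responses.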

The JSON in your output file is similar to that of an image's [document text detection response](/vision/docs/ocr), with the addition of a context field showing the location of the PDF or TIFF that was specified and the number of pages in the file:

output-1-to-1.json

Go

Before trying this sample, follow the Go setup instructions in the Vision quickstart using client libraries. For more information, see the Vision Go API reference documentation.

To authenticate to Vision, set up Application Default Credentials. For more information, see Set up authentication for a local development environment.

Java

Before trying this sample, follow the Java setup instructions in the Vision API Quickstart Using Client Libraries. For more information, see the Vision API Java reference documentation.

Node.js

Before trying this sample, follow the Node.js setup instructions in the Vision quickstart using client libraries. For more information, see the Vision Node.js API reference documentation.

To authenticate to Vision, set up Application Default Credentials. For more information, see Set up authentication for a local development environment.

Python

Before trying this sample, follow the Python setup instructions in the Vision quickstart using client libraries. For more information, see the Vision Python API reference documentation.

To authenticate to Vision, set up Application Default Credentials. For more information, see Set up authentication for a local development environment.

gcloud

The gcloud command you use depends on the file type.

To perform PDF text detection, use the gcloud ml vision detect-text-pdf command as shown in the following example:

gcloud ml vision detect-text-pdf gs://my_bucket/input_file gs://my_bucket/out_put_prefix

To perform TIFF text detection, use the gcloud ml vision detect-text-tiff command as shown in the following example:

gcloud ml vision detect-text-tiff gs://my_bucket/input_file gs://my_bucket/out_put_prefix
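When scripting these commands, the choice between the two subcommands can be automated. The Python sketch below builds the argument list from the input URI. The function name gcloud_detect_command is hypothetical, and picking the subcommand from the file extension is an assumption made for illustration; gcloud itself does not require any particular extension.

```python
def gcloud_detect_command(input_uri, output_prefix):
    """Return the gcloud argument list for PDF or TIFF text detection.

    Assumption: the file type is inferred from the URI's extension.
    """
    lower = input_uri.lower()
    if lower.endswith(".pdf"):
        subcommand = "detect-text-pdf"
    elif lower.endswith((".tif", ".tiff")):
        subcommand = "detect-text-tiff"
    else:
        raise ValueError(f"cannot infer PDF or TIFF from {input_uri!r}")
    return ["gcloud", "ml", "vision", subcommand, input_uri, output_prefix]
```

The returned list can be passed to subprocess.run on a machine where the gcloud CLI is installed and authenticated.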

Additional languages

C#: Please follow the C# setup instructions on the client libraries page and then visit the Vision reference documentation for .NET.

PHP: Please follow the PHP setup instructions on the client libraries page and then visit the Vision reference documentation for PHP.

Ruby: Please follow the Ruby setup instructions on the client libraries page and then visit the Vision reference documentation for Ruby.

Multi-regional support

You can now specify continent-level data storage and OCR processing. The following regions are currently supported:

- us: USA country only

- eu: The European Union

Locations

Cloud Vision offers you some control over where the resources for your project are stored and processed. In particular, you can configure Cloud Vision to store and process your data only in the European Union.

By default Cloud Vision stores and processes resources in a Global location, which means that Cloud Vision doesn't guarantee that your resources will remain within a particular location or region. If you choose the European Union location, Google will store your data and process it only in the European Union. You and your users can access the data from any location.

Setting the location using the API

The Vision API supports a global API endpoint (vision.googleapis.com) and also two region-based endpoints: a European Union endpoint (eu-vision.googleapis.com) and a United States endpoint (us-vision.googleapis.com). Use these endpoints for region-specific processing. For example, to store and process your data in the European Union only, use the URI eu-vision.googleapis.com in place of vision.googleapis.com for your REST API calls:

- https://eu-vision.googleapis.com/v1/projects/PROJECT_ID/locations/eu/images:annotate

- https://eu-vision.googleapis.com/v1/projects/PROJECT_ID/locations/eu/images:asyncBatchAnnotate

- https://eu-vision.googleapis.com/v1/projects/PROJECT_ID/locations/eu/files:annotate

- https://eu-vision.googleapis.com/v1/projects/PROJECT_ID/locations/eu/files:asyncBatchAnnotate

To store and process your data in the United States only, use the US endpoint (us-vision.googleapis.com) with the preceding methods.
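The URL pattern above can be captured in a small Python helper. This is a sketch only: the function name files_async_url is hypothetical, and it covers just the files:asyncBatchAnnotate method with the global, us, and eu options described on this page.

```python
def files_async_url(project_id=None, region=None):
    """Return the files:asyncBatchAnnotate URL for a region, or the global one."""
    if region is None:
        return "https://vision.googleapis.com/v1/files:asyncBatchAnnotate"
    if region not in ("us", "eu"):
        raise ValueError(f"unsupported region: {region!r}")
    if not project_id:
        raise ValueError("a project ID is required for regional endpoints")
    return (
        f"https://{region}-vision.googleapis.com/v1/projects/{project_id}"
        f"/locations/{region}/files:asyncBatchAnnotate"
    )
```

Keeping the host prefix and the locations segment tied to the same region argument avoids mixing, for example, the EU host with a US location path.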

Setting the location using the client libraries

The Vision API client libraries access the global API endpoint (vision.googleapis.com) by default. To store and process your data in the European Union only, you need to explicitly set the endpoint (eu-vision.googleapis.com). The following code samples show how to configure this setting.
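As a minimal Python sketch of the idea, the helper below builds the endpoint option for a given region. The function name regional_client_options is hypothetical. The block deliberately does not import the client library; the commented lines show how the resulting dict would typically be passed to the google-cloud-vision client, assuming that package is installed.

```python
def regional_client_options(region):
    """Return client options that point a client at a regional endpoint."""
    if region not in ("us", "eu"):
        raise ValueError(f"unsupported region: {region!r}")
    return {"api_endpoint": f"{region}-vision.googleapis.com"}


# Assumed usage with the google-cloud-vision package (not imported here):
#   from google.cloud import vision
#   client = vision.ImageAnnotatorClient(
#       client_options=regional_client_options("eu"))
```

Without explicit client options, the client continues to use the global endpoint.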

REST

Before using any of the request data, make the following replacements:

- REGION_ID: One of the valid regional location identifiers:

  - us: USA country only

  - eu: The European Union

- CLOUD_STORAGE_IMAGE_URI: The path to a valid image file in a Cloud Storage bucket. You must have at least read privileges to the file. Example: gs://cloud-samples-data/vision/pdf_tiff/census2010.pdf

- CLOUD_STORAGE_BUCKET: A Cloud Storage bucket/directory to save output files to, expressed in the following form: gs://bucket/directory/

- FEATURE_TYPE: A valid feature type. For files:asyncBatchAnnotate requests you can use the following feature types: DOCUMENT_TEXT_DETECTION, TEXT_DETECTION

- PROJECT_ID: Your Google Cloud project ID.

Field-specific considerations:

- inputConfig: replaces the image field used in other Vision API requests. It contains two child fields:

  - gcsSource.uri: the Google Cloud Storage URI of the PDF or TIFF file (accessible to the user or service account making the request).

  - mimeType: one of the accepted file types: application/pdf or image/tiff.

- outputConfig: specifies output details. It contains two child fields:

  - gcsDestination.uri: a valid Google Cloud Storage URI. The bucket must be writeable by the user or service account making the request. The filename will be output-x-to-y, where x and y represent the PDF/TIFF page numbers included in that output file. If the file exists, its contents will be overwritten.

  - batchSize: specifies how many pages of output should be included in each output JSON file.

HTTP method and URL:

POST https://REGION_ID-vision.googleapis.com/v1/projects/PROJECT_ID/locations/REGION_ID/files:asyncBatchAnnotate

Request JSON body:

{
  "requests": [
    {
      "inputConfig": {
        "gcsSource": {
          "uri": "CLOUD_STORAGE_IMAGE_URI"
        },
        "mimeType": "application/pdf"
      },
      "features": [
        {
          "type": "FEATURE_TYPE"
        }
      ],
      "outputConfig": {
        "gcsDestination": {
          "uri": "CLOUD_STORAGE_BUCKET"
        },
        "batchSize": 1
      }
    }
  ]
}

To send your request, choose one of these options:

curl

Save the request body in a file named request.json, and execute the following command:

curl -X POST \
  -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  -H "x-goog-user-project: PROJECT_ID" \
  -H "Content-Type: application/json; charset=utf-8" \
  -d @request.json \
  "https://REGION_ID-vision.googleapis.com/v1/projects/PROJECT_ID/locations/REGION_ID/files:asyncBatchAnnotate"

PowerShell

Save the request body in a file named request.json, and execute the following command:

$cred = gcloud auth print-access-token
$headers = @{ "Authorization" = "Bearer $cred"; "x-goog-user-project" = "PROJECT_ID" }

Invoke-WebRequest `
  -Method POST `
  -Headers $headers `
  -ContentType: "application/json; charset=utf-8" `
  -InFile request.json `
  -Uri "https://REGION_ID-vision.googleapis.com/v1/projects/PROJECT_ID/locations/REGION_ID/files:asyncBatchAnnotate" | Select-Object -Expand Content

A successful asyncBatchAnnotate request returns a response with a single name field:

{ "name": "projects/usable-auth-library/operations/1efec2285bd442df" }

This name represents a long-running operation with an associated ID (for example, 1efec2285bd442df), which can be queried using the v1.operations API.

To retrieve your Vision annotation response, send a GET request to the v1.operations endpoint, passing the operation ID in the URL:

GET https://vision.googleapis.com/v1/operations/operation-id

For example:

curl -X GET -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" \
  -H "Content-Type: application/json" \
  https://vision.googleapis.com/v1/projects/project-id/locations/location-id/operations/1efec2285bd442df

If the operation is in progress:

{ "name": "operations/1efec2285bd442df", "metadata": { "@type": "type.googleapis.com/google.cloud.vision.v1.OperationMetadata", "state": "RUNNING", "createTime": "2019-05-15T21:10:08.401917049Z", "updateTime": "2019-05-15T21:10:33.700763554Z" } }

Once the operation has completed, the state shows as DONE and your results are written to the Google Cloud Storage file you specified:

{ "name": "operations/1efec2285bd442df", "metadata": { "@type": "type.googleapis.com/google.cloud.vision.v1.OperationMetadata", "state": "DONE", "createTime": "2019-05-15T20:56:30.622473785Z", "updateTime": "2019-05-15T20:56:41.666379749Z" }, "done": true, "response": { "@type": "type.googleapis.com/google.cloud.vision.v1.AsyncBatchAnnotateFilesResponse", "responses": [ { "outputConfig": { "gcsDestination": { "uri": "gs://your-bucket-name/folder/" }, "batchSize": 1 } } ] } }

The JSON in your output file is similar to that of an image's document text detection response if you used the DOCUMENT_TEXT_DETECTION feature, or text detection response if you used the TEXT_DETECTION feature. The output will have an additional context field showing the location of the PDF or TIFF that was specified and the number of pages in the file:

output-1-to-1.json

Go

Before trying this sample, follow the Go setup instructions in the Vision quickstart using client libraries. For more information, see the Vision Go API reference documentation.

To authenticate to Vision, set up Application Default Credentials. For more information, see Set up authentication for a local development environment.

Java

Before trying this sample, follow the Java setup instructions in the Vision API Quickstart Using Client Libraries. For more information, see the Vision API Java reference documentation.

Node.js

Before trying this sample, follow the Node.js setup instructions in the Vision quickstart using client libraries. For more information, see the Vision Node.js API reference documentation.

To authenticate to Vision, set up Application Default Credentials. For more information, see Set up authentication for a local development environment.

Python

Before trying this sample, follow the Python setup instructions in the Vision quickstart using client libraries. For more information, see the Vision Python API reference documentation.

To authenticate to Vision, set up Application Default Credentials. For more information, see Set up authentication for a local development environment.
